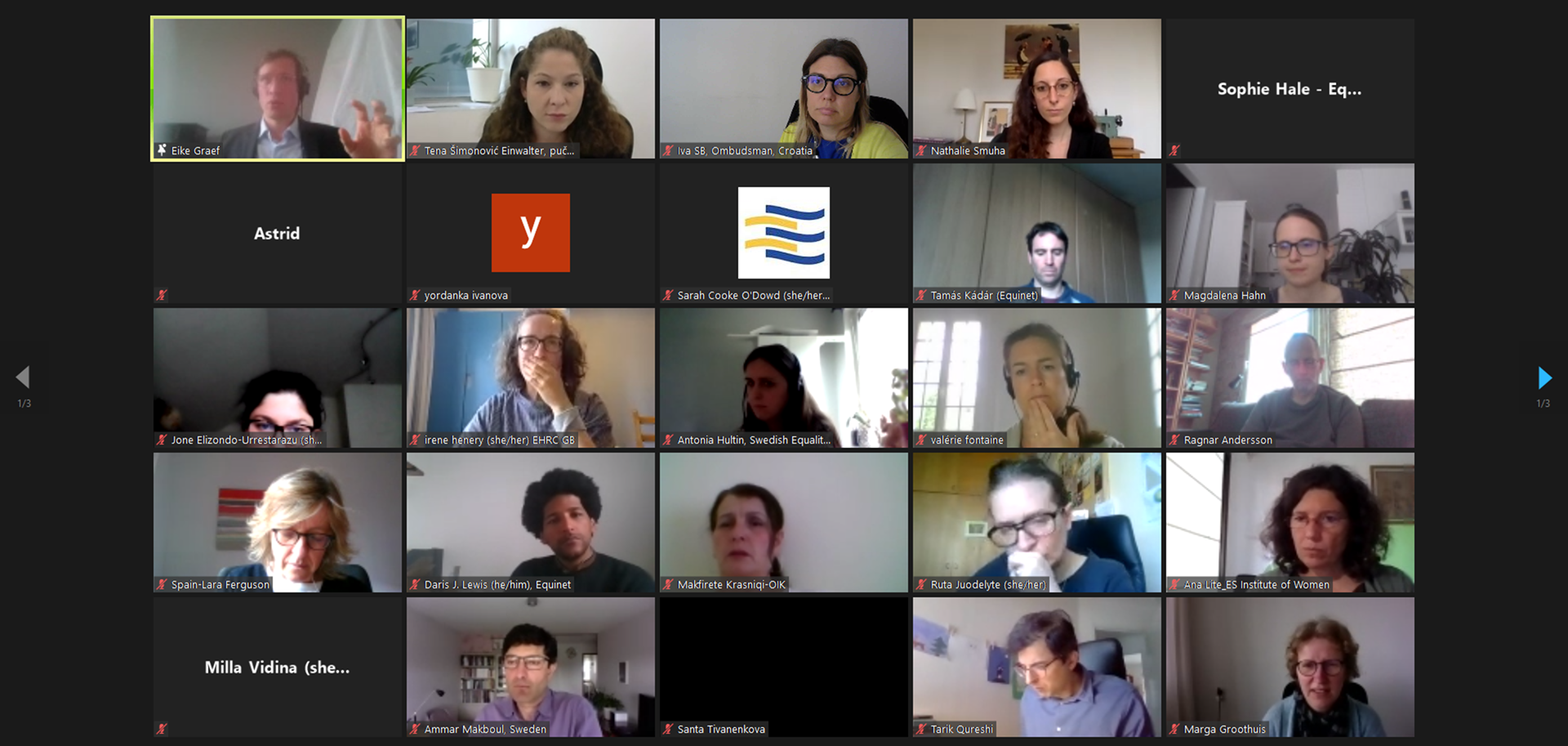

The European Network of Equality Bodies (Equinet) organized a two-part workshop on 22 and 30 April 2021 on the legal and practical challenges of AI use in everyday life and its impact on equality.

In recent years, the use of AI systems has been gaining traction in many areas of human activity. This creates a need for cooperation between equality bodies, the academic community and tech companies, so that technological problems, as well as problems that could lead to human rights violations or discrimination, are identified and corrected in time. This type of multidisciplinary approach could help prevent the negative effects of AI implementation on individuals and various social groups.

Cooperation of Experts from Various Fields

An important role in these processes will be played by the oversight bodies set to be established under the new AI regulation at the European Union level. If these bodies fail to include AI experts with the knowledge necessary to monitor and control AI systems, citizens will not receive comprehensive protection. In such cases, equality bodies, tasked with combating discrimination and promoting equality, will need to step in to help protect citizens’ rights and freedoms.

However, many citizens are still unaware of how widely AI systems are used in various areas of life. As a result, equality bodies are not yet receiving large numbers of complaints in this area, which would help them identify the problems stemming from the use of such technologies. The implementation of AI systems needs to be accompanied by human rights impact assessments that are transparent and rooted in respect for ethical principles and values. Responsibility for potential errors needs to be clearly defined, and efficient legal remedies need to be envisioned and made accessible.

Can AI Comprehend Inequality?

From the human rights and equality perspective, one of the greatest challenges in designing AI systems that would fulfill the above-mentioned criteria is that they use abstract algorithms to reach their decisions, i.e. they possess no common sense, emotions or awareness of the potential discriminatory effects their decisions might produce. Contextualization or, more specifically, the use of various tools to test whether the data entered into the systems are biased, might provide a solution to this problem. It is important, however, to make both the methods used in such procedures and their results public.
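One simple test of this kind compares how often each group in a data set receives a favorable outcome. The sketch below is purely illustrative: the record layout, the group labels "A" and "B", the sample data and the function names are all assumptions made for the example, not tools or data presented at the workshop.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Return the share of favorable outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        if row[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Ratio of the lowest to the highest rate (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Hypothetical, made-up records used only to show the calculation.
sample = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "A", "approved": True},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

rates = selection_rates(sample, "group", "approved")
ratio = rate_ratio(rates)
print(rates)
print(round(ratio, 2))
```

A ratio well below 1.0 indicates that outcomes differ between groups and that the data deserve closer scrutiny. It does not by itself establish discrimination, which still requires a legal and contextual assessment.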

An Example from Finland

Workshop participants discussed a number of concrete cases from their work in which the use of AI systems led to inequality. One example involved a credit institution in Finland whose automated scoring model used potential clients’ sex, language, age and place of residence as criteria in its decision-making. This practice was found to constitute direct and multiple discrimination. The court held it unacceptable to use sex and language as criteria for reaching decisions, whereas it deemed the criteria of age and place of residence partially acceptable, stressing that their admissibility should be assessed on a case-by-case basis.

Leading Experts in AI and Equality

The event brought together leading names in the area of AI, including Professor Raja Chatila (Sorbonne University), Professor Sandra Wachter (University of Oxford), Professors Janneke Gerards (Utrecht University) and Raphaële Xenidis (University of Edinburgh and University of Copenhagen), barrister Robin Allen and European Commission representative Eike Gräf. Ombudswoman Tena Šimonović Einwalter, Equinet Chair and the ECRI representative in the Council of Europe’s Ad hoc Committee on Artificial Intelligence (CAHAI), moderated the panel on the EU’s new regulatory framework on AI and its comparison with the framework currently being developed by the Council of Europe.